LastPass: Hackers targeted employee in failed deepfake CEO call

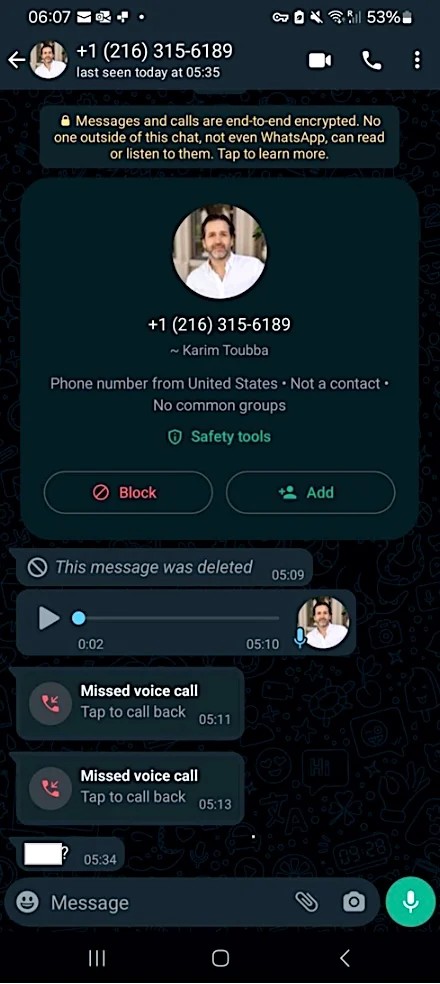

LastPass revealed this week that threat actors targeted one of its employees in a voice phishing attack, using deepfake audio to impersonate Karim Toubba, the company's Chief Executive Officer.

According to a recent global study, 25% of people have been on the receiving end of an AI voice impersonation scam or know someone who has. The LastPass employee, however, didn't fall for it because the attacker used WhatsApp, which is a very uncommon business channel.

"In our case, an employee received a series of calls, texts, and at least one voicemail featuring an audio deepfake from a threat actor impersonating our CEO via WhatsApp. As the attempted communication was outside of normal business communication channels and due to the employee's suspicion regarding the presence of many of the hallmarks of a social engineering attempt (such as forced urgency), our employee rightly ignored the messages and reported the incident to our internal security team so that we could take steps to both mitigate the threat and raise awareness of the tactic both internally and externally," said LastPass intelligence analyst Mike Kosak.

Kosak added that the attack failed and had no impact on LastPass. However, the company still chose to share details of the incident to warn other companies that AI-generated deepfakes are already being used in executive impersonation fraud campaigns.

The deepfake audio used in this attack was likely generated by voice-cloning models trained on publicly available audio recordings of LastPass' CEO, likely a recording available on YouTube.

The incident follows two security breaches that LastPass disclosed in August 2022 and November 2022.

LastPass says its password management platform is now used by millions of users and over 100,000 businesses worldwide.

Deepfake attacks on the rise

LastPass' warning follows a U.S. Department of Health and Human Services (HHS) alert issued last week regarding cybercriminals targeting IT help desks using social engineering tactics and AI voice cloning tools to deceive their targets.

Audio deepfakes also make it much harder to verify a caller's identity remotely, so attacks in which threat actors impersonate executives and company employees become very hard to detect.

While the HHS shared advice specific to attacks targeting IT help desks of organizations in the health sector, the following also very much applies to CEO impersonation fraud attempts:

- Require callbacks to verify employees requesting password resets and new MFA devices.

- Monitor for suspicious ACH changes.

- Revalidate all users with access to payer websites.

- Consider in-person requests for sensitive matters.

- Require supervisors to verify requests.

- Train help desk staff to identify and report social engineering techniques and verify callers' identities.
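As a rough illustration of how the checks above could be combined into a single triage step, here is a minimal Python sketch. It is not taken from the HHS alert or from LastPass: the channel allowlist, the set of sensitive actions, and the names `Request` and `review_request` are all hypothetical and would need to match an organization's own policy.

```python
from dataclasses import dataclass

# Hypothetical allowlist of channels the organization treats as official.
APPROVED_CHANNELS = {"corporate_email", "corporate_chat", "desk_phone"}

# Hypothetical set of request types treated as sensitive (assumption,
# loosely based on the recommendations listed above).
SENSITIVE_ACTIONS = {"password_reset", "new_mfa_device", "ach_change"}


@dataclass
class Request:
    requester: str                      # claimed identity of the caller
    action: str                         # what is being asked for
    channel: str                        # how the request arrived
    callback_verified: bool = False     # called back on a known-good number
    supervisor_approved: bool = False   # a supervisor confirmed the request


def review_request(req: Request) -> list[str]:
    """Return reasons to hold the request; an empty list means proceed."""
    reasons = []
    if req.channel not in APPROVED_CHANNELS:
        reasons.append("unapproved channel")
    if req.action in SENSITIVE_ACTIONS:
        if not req.callback_verified:
            reasons.append("callback not completed")
        if not req.supervisor_approved:
            reasons.append("supervisor has not verified")
    return reasons


# A request like the one described in the article: a claimed executive
# contacting an employee over WhatsApp with no verification steps done.
print(review_request(Request("ceo", "ach_change", "whatsapp")))
```

In this sketch the claimed identity of the requester never shortens the checks, since a cloned voice makes that claim unreliable on its own.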

In March 2021, the FBI also issued a Private Industry Notification (PIN) cautioning that deepfakes (including AI-generated or manipulated audio, text, images, or video) were becoming increasingly sophisticated and would likely be widely employed by hackers in "cyber and foreign influence operations."

Additionally, Europol warned in April 2022 that deepfakes may soon become a tool that cybercriminal groups routinely use in CEO fraud, evidence tampering, and non-consensual pornography creation.
